Recently I was looking back at the origins of our work here at Mojolab and came across an old pair of documents from 2006. At the time I was a fresh engineering graduate working two jobs and trying to get a fellowship at Stanford to build what I then called an “Alternative Information Architecture” for Chhattisgarh, which was a focus area for two generations of my family. This is the definition we had of an “alternative network” back then:

Introduction to Alternative Networks

For any community aiming to share information efficiently with a view to participation in development, an efficient communication network is mandatory. Communication networks are usually perceived as being a set of hardware to perform the physical transfer of data and a set of protocols, which govern the transfer. However, in most definitions of communication networks the user’s significance is somewhat suppressed. The individual using the network is perceived as an external agency, not really a part of the network. The developments that may occur as a result of human interaction with the system are also seldom taken into consideration at the time of design. The result is that the benefits from incorporating each user of the network as a network component are overlooked.

Alternative networks, which aim to diverge from the existing traditional network models, can be of many types. The common factor among all such networks is that they aim to de-centralize and make informal the process of communication.

What’s interesting to note is that while I was able to articulate that the type of network needed would be an “alternative”, I didn’t have a clear articulation of why it was an alternative or what it was an alternative to. If I were to rephrase it today, I would call it a hybrid mesh network: multiple channels at multiple layers of the network, each connecting to one or many nodes, as an alternative to a network of stars or a tree with clearly laid out hierarchies.

The means of change from a hierarchical model to a networked, peer-driven one would be to supersede rather than break the hierarchies already present, by creating new interlinks complementary to the existing ones.

The notion of incorporating the user would remain as is, though I would further elaborate that the role of the user would simply be to increase his/her own data acquisition and dissemination capability by learning more skills. One measure of this could be the number of channels that a user is adept at using for communication.

At the time, the essential or defining characteristics that I could come up with for any such alternative network were as follows:

Definition of an Alternate Information Architecture

The most attractive fact about an alternative architecture is that it is extremely conducive to customization.

The primary concepts that this alternative architecture will use are:

• Layering
• Self Organization
• Self Sustenance
• Robustness and Self Healing
• Collective Intelligence

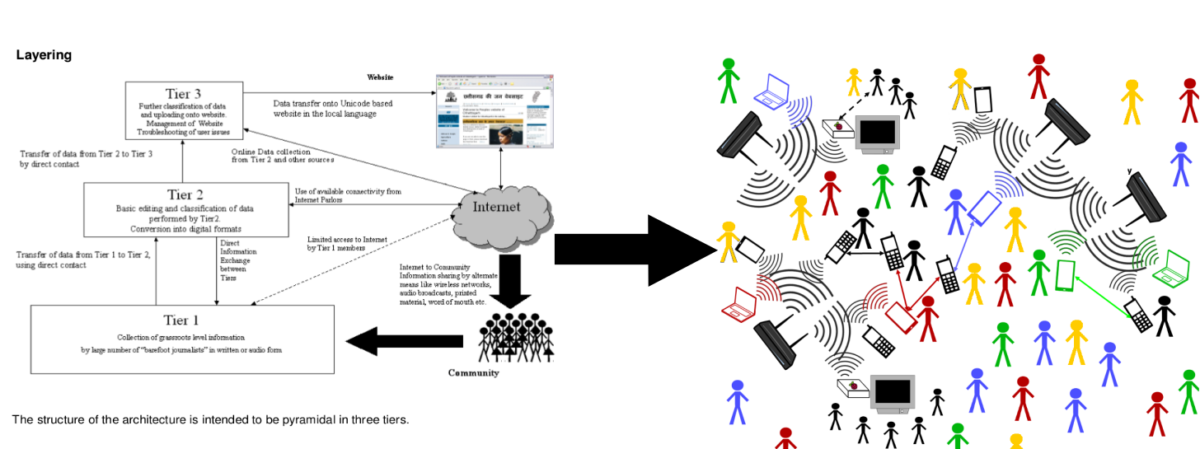

In hindsight, the layering model I had in mind at the time was constrained by my limited field experience. As a result, the descriptions of the layers below take a rather hierarchical form, which is counterproductive to the goal of decentralization.

Tier 1, Information Gathering

Grassroots level information gathering, by “barefoot journalists”, who would be trained minimally. The data at this stage could be in audio form, or, if the “barefoot journalist” is educated to some basic level, then written formats could also be used.

The skills required by the members of this tier would typically include: a) an ability to recognize information relevant to the community, b) self-motivation and enthusiasm and c) basic aptitude towards learning.

The members at this tier could also be involved in making efficient use of the oral tradition of the region to disseminate information.

Tier 2, Compilation and basic editing

Compilation of information gathered by “barefoot journalists” into digital form and basic editing. This would involve conversion of audio material into text for dissemination on the Internet, basic editing of the text matter to ensure that relevance and consistency are maintained and, finally, categorizing the information. The skills required by members at this level would be a) ability to use a computer with reasonable proficiency, b) ability to analyze large amounts of data, c) basic aptitude for editing and d) ability to recognize relevant information.

Since this tier is intended to be involved in basic editing and compilation, a fundamental level of error checking would be required. Also on the information dissemination side, this tier would be responsible for some routing of the information to the various sections of the community.

The members at this tier would also be responsible for spreading awareness about the usage of the architecture to obtain information. They would be required to demonstrate the website use to other members of the community. In addition to this, they could be involved in the dissemination of audio-visual content in areas where literacy and connectivity are low.

Tier 3, Planning, Management and Dissemination

Collection of compiled data from Tier 2, classification of the data into different sections for easy user access and dissemination through the CGNet website. This level would involve some degree of technical know-how. The skills required for this tier would include:

- ability to create and manage web content,
- basics of local language computing and
- ability to present information in a manner that can be effectively received and used by the community.

This tiered architecture was in fact implemented in the form of CGNet Swara by 2010. The Tier 1 participants reported and listened to content using mobile phones, which require a minimal amount of training to use effectively. Tier 2 consisted of a verification and moderation network, and Tier 3, i.e. the online tier, was social media.

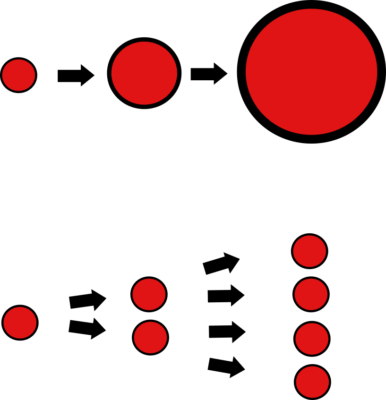

This implementation was key in helping us understand the inherent limitations of the hierarchical layering structure. As the diagram below shows, the flow of information in a hierarchical structure is vertical. While this does not prevent horizontal information flows, it neither acknowledges nor leverages them. As a result, the cost of information transfer in a hierarchical system rises as the system scales, since all information must first rise from the bottom to the top and then descend again, ostensibly in a filtered and value-added form. However, the costs and benefits of such value addition must be weighed against those of horizontal information transfer, where members are free to share information irrespective of their position in the hierarchy and the “quality” and “credibility” of a source are based on peer review rather than hierarchical accreditation.
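
To make the cost argument concrete, here is a minimal sketch in Python. It assumes that the number of links a message crosses (its hop count) is a fair stand-in for the cost of transfer, which is a simplification of mine and not something the 2006 paper defines. The node names and the shape of the network are hypothetical.

```python
from collections import deque

def connect(links, a, b):
    """Add an undirected link between nodes a and b."""
    links.setdefault(a, set()).add(b)
    links.setdefault(b, set()).add(a)

def hops(links, src, dst):
    """Breadth-first search: fewest links a message crosses from src to dst."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in links.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None  # dst cannot be reached from src

# Hypothetical three-tier hierarchy: one Tier 3 hub, two Tier 2 editors,
# and two Tier 1 reporters under each editor.
hierarchy = {}
connect(hierarchy, "hub", "editor_a")
connect(hierarchy, "hub", "editor_b")
connect(hierarchy, "editor_a", "reporter_1")
connect(hierarchy, "editor_a", "reporter_2")
connect(hierarchy, "editor_b", "reporter_3")
connect(hierarchy, "editor_b", "reporter_4")

# In the pure hierarchy, a message between reporters in different branches
# has to climb to the hub and descend again.
vertical_hops = hops(hierarchy, "reporter_2", "reporter_3")

# The same network with one extra horizontal link between the two reporters.
mesh = {node: set(peers) for node, peers in hierarchy.items()}
connect(mesh, "reporter_2", "reporter_3")
horizontal_hops = hops(mesh, "reporter_2", "reporter_3")

print(vertical_hops, horizontal_hops)
```

In this toy network the vertical route takes four hops and the horizontal link takes one. Note that the extra link is added alongside the hierarchy rather than replacing it, so the vertical route is still there for content that benefits from editing.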

The other issue with the model as described in the paper is that it assumes uniform access to the Internet. That I wrote this in 2006 and Internet access remains very fragmented even 10 years later clearly shows the fallacy of this assumption. Furthermore, while the model takes into account the effort of curating incoming information from the grassroots, it does not acknowledge or measure the effort of sending information to the grassroots.

Given the condition of Internet penetration and the asymmetry of useful information vs noise on the Internet, the data dissemination cycle needs at least as much effort as the data acquisition cycle.

When one takes this into account, it becomes evident that any entity that applies equal rigor to data collection and dissemination can only be a peer of the other elements of the network, and that the efficacy of an entity as a network member would be measured not by hierarchical certification but by the number of channels it operates in. In other words, the credibility of an information source would be based on the number of people it interacts with and the number of people who interact with it, rather than on how effectively it talked about its “processes” and how many people it “certified”.
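
As a rough illustration of what such a measure might look like, the sketch below counts, for each network member, the distinct peers it sends to, the distinct peers that send to it, and the channels it is active on. The interaction log, the names and the `peer_profile` function are all hypothetical. Treating raw counts as credibility is an assumption made for the example, not a validated metric.

```python
from collections import defaultdict

# Hypothetical interaction log: (sender, receiver, channel).
interactions = [
    ("asha", "ravi", "voice"),
    ("asha", "meena", "sms"),
    ("ravi", "asha", "voice"),
    ("meena", "asha", "radio"),
    ("portal", "asha", "web"),
]

def peer_profile(log):
    """Count distinct outgoing peers, incoming peers and channels per member."""
    reaches = defaultdict(set)     # member -> peers it sends information to
    reached_by = defaultdict(set)  # member -> peers that send information to it
    channels = defaultdict(set)    # member -> channels it is active on
    for sender, receiver, channel in log:
        reaches[sender].add(receiver)
        reached_by[receiver].add(sender)
        channels[sender].add(channel)
        channels[receiver].add(channel)
    members = set(reaches) | set(reached_by)
    return {
        member: {
            "reaches": len(reaches[member]),
            "reached_by": len(reached_by[member]),
            "channels": len(channels[member]),
        }
        for member in members
    }

profile = peer_profile(interactions)
for member in sorted(profile):
    print(member, profile[member])
```

In this made-up log, a member who both sends and receives across several channels scores higher on every count than a source that only broadcasts on one channel, which is the distinction the paragraph above is drawing.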

The result will likely be much messier…but much more colorful!

The full paper and abstract are linked below. I will be posting further updates to this train of thought as the analysis continues.